With the release of Rivet for Vercel Functions, you can now build WebSocket servers on Vercel & Next.js. Check out the getting started guide for Next.js or visit our GitHub.

One of the biggest limitations of many serverless platforms — including Vercel Functions — is the lack of support for WebSockets. This architecture choice makes sense for stateless applications, but it means that anything involving bi-directional communication needs to resort to alternative, more complicated solutions.

However, with the release of Rivet for Vercel Functions, you can now build WebSocket servers on Vercel Functions. This means that applications such as collaborative documents, chat rooms, local-first sync, really-really-long-running agents, pub/sub servers, and multiplayer games can now be deployed with Vercel Functions + Rivet.

What does this look like?

Before jumping into the details, a quick overview of how the API for WebSockets on Vercel Functions looks:

Rivet enables you to run code directly inside your Vercel Function, including support for frameworks like Next.js. This means that you can keep your deployment process, logs, and analytics all on Vercel without pulling in an external compute provider.

You have two options for implementing WebSockets:

- For direct control over WebSocket connections, you can handle them with standard WebSocket APIs.

- The actions API provides a clean, type-safe way to handle WebSocket communication.

Either way, the implementation spans the same three files: the registry (src/rivet/registry.ts), the API route (app/api/rivet/[...all]/route.ts), and a client component (app/components/ChatRoom.tsx).

More than just WebSockets

To those familiar with Socket.io or PartyKit, the above code probably rings a bell. Like these frameworks, Rivet enables multiple clients to connect to the same server instance, creating shared rooms or lobbies where all connected clients interact with the same running code and can communicate with each other through that single server.

That’s because Rivet provides WebSocket servers that allow multiple WebSockets to connect to the same “lobby” (what we call Rivet Actors).

This makes logic for anything collaborative significantly simpler. Instead of managing distributed state across multiple function invocations with databases and pub/sub servers, Rivet lets you write your logic as if it’s a single long-running server. All client connections — whether WebSockets, HTTP requests, or scheduled tasks — interact with the same actor instance, and Rivet handles routing them there automatically.

Rivet’s WebSocket implementation provides:

- Addressable WebSocket “lobbies”: Route WebSockets to the same “lobby” using a unique string key for that lobby

- Outlive function execution timeouts: Rivet allows WebSockets to outlive function timeouts with live migrations

- Multi-region edge support: Vercel Functions supports multiple edge regions for minimizing latency to your users, and so does Rivet

- Vanilla WebSockets, no special protocol: Rivet is built on web standards; everything you read in this blog post can be done with a raw WebSocket in your browser or Node.js

- Observability: Rivet provides robust debugging & observability tools to view all of your connections, WebSocket events, and active servers in realtime

The secret sauce

The part you came here to read — what dark arts did we use to pull this off? We built Rivet using a battle-tested pattern called “tunneling.”

What is tunneling?

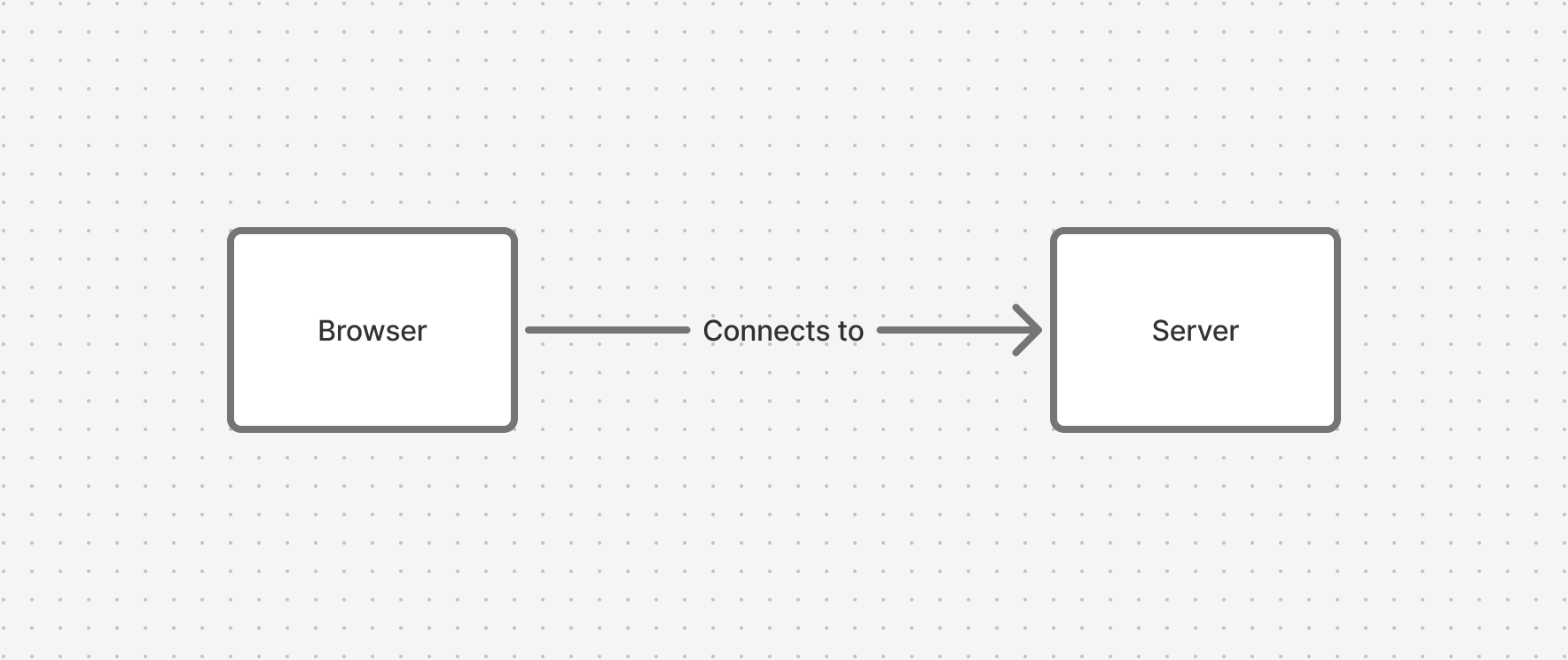

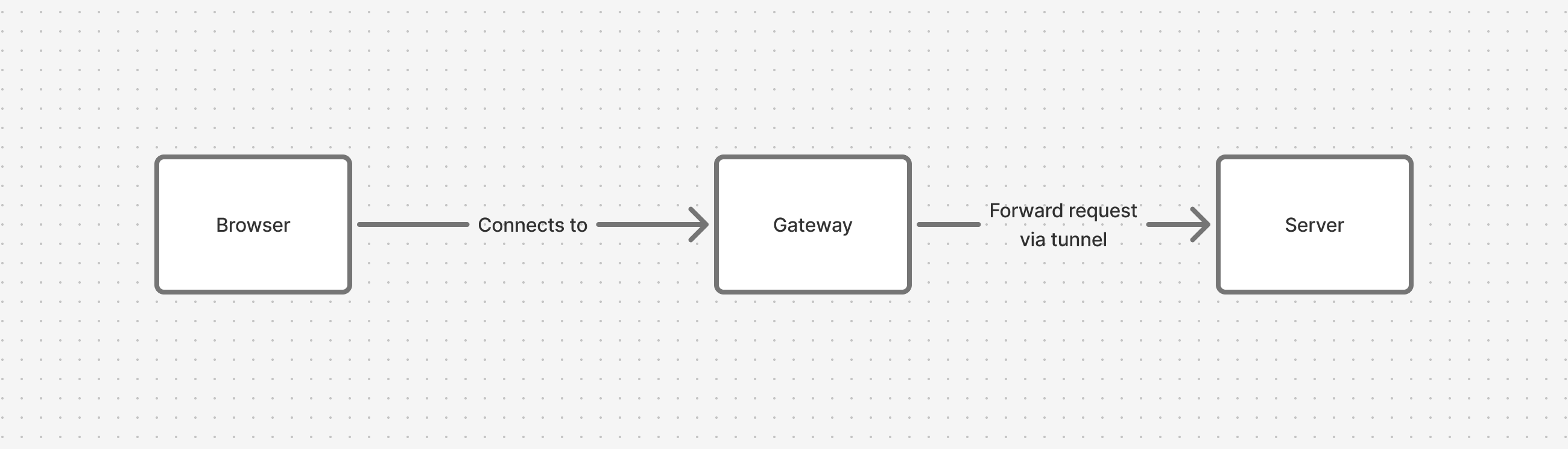

In traditional architectures, clients connect directly to a server’s open port. The connection model is straightforward — your browser knows the server’s address and connects to it:

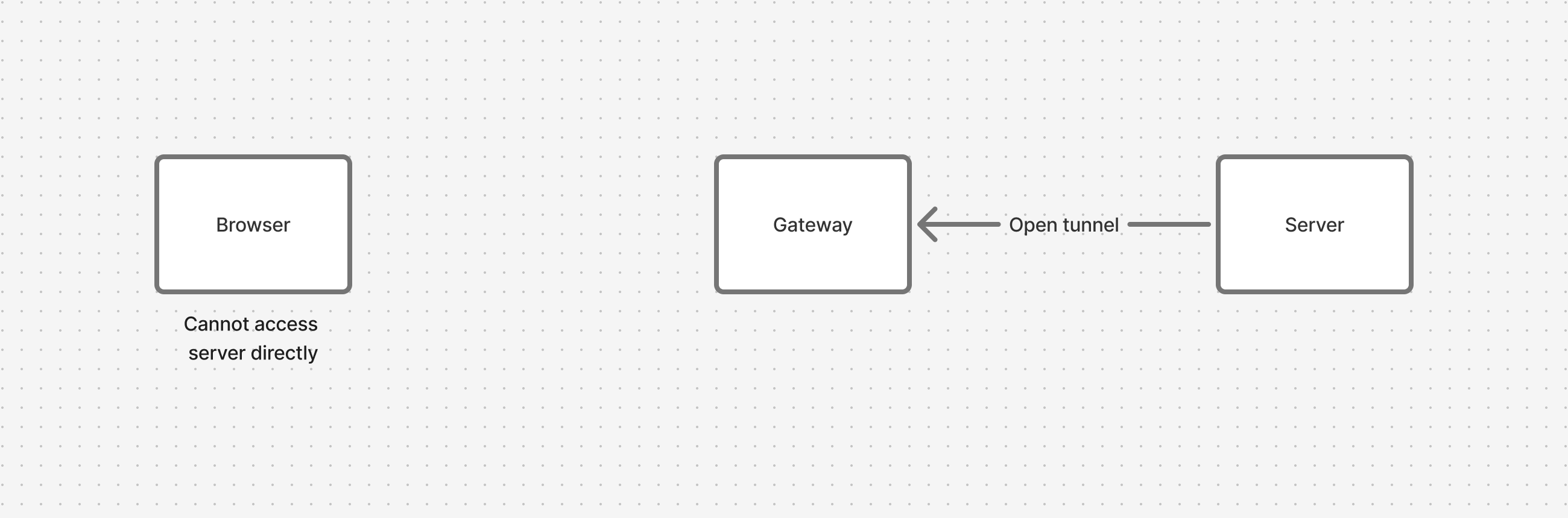

Tunneling flips this model. Instead of waiting for incoming connections, the server opens an outbound connection to a gateway. This works for environments where the server has no open ports and can only make outbound connections — the gateway is publicly accessible on the internet, but the server itself doesn’t need to expose anything.

Step 1: Server opens tunnel to gateway

The server establishes an outbound connection to the gateway. This connection stays open and will be used to receive all incoming traffic.

Step 2: Browser connects via gateway

When a browser wants to connect to the server, it connects to the gateway instead. The gateway then forwards all traffic through the already-open tunnel to the server. This works because the server only needs the ability to make outbound connections, not accept inbound ones. From the browser’s perspective, it’s talking to a normal server; it has no idea there’s a tunnel involved.

The key detail: the server never needs to accept inbound connections; it only needs to maintain an outbound one.
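To make the two steps concrete, here is a minimal in-memory sketch in TypeScript. It is not Rivet’s protocol: Gateway, openTunnel, and request are hypothetical names, and a function callback stands in for the network connection the server dials out on. What it shows is the direction of setup: the server registers itself with the gateway, and the browser only ever talks to the gateway.

```typescript
// Hypothetical in-memory model of tunneling; not Rivet's wire protocol.
type Handler = (message: string) => string;

interface Tunnel {
  serverId: string;
  deliver: Handler; // how the gateway pushes traffic down the open tunnel
}

class Gateway {
  private tunnels = new Map<string, Tunnel>();

  // Step 1: called by the server. From the server's side this is an
  // outbound connection; it never listens on a port.
  openTunnel(serverId: string, deliver: Handler): void {
    this.tunnels.set(serverId, { serverId, deliver });
  }

  // Step 2: called by the browser, which only knows the gateway's address.
  request(serverId: string, message: string): string {
    const tunnel = this.tunnels.get(serverId);
    if (tunnel === undefined) throw new Error(`no tunnel open for ${serverId}`);
    return tunnel.deliver(message); // forwarded through the existing tunnel
  }
}

// A "server" with no open ports: all it does is dial out to the gateway.
function startServer(gateway: Gateway, serverId: string): void {
  gateway.openTunnel(serverId, (message) => `echo from ${serverId}: ${message}`);
}

const gateway = new Gateway();
startServer(gateway, "function-1");
console.log(gateway.request("function-1", "hello")); // echo from function-1: hello
```

A real tunnel carries this traffic over one long-lived network connection rather than a callback, but the ownership model is the same: without the server’s outbound registration, the gateway has nowhere to send the request.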

If you’ve used Tailscale, WireGuard, VS Code’s shared servers or port forwarding, ngrok, Cloudflare Tunnels, or SSH tunnels, you’ve used a form of tunneling before.

How does tunneling apply to Vercel Functions and WebSockets?

Here’s the fun part: tunnels let us forward WebSocket connections to Vercel Functions that can only make outbound requests. (We can even forward TCP & UDP traffic! But alas, I must stay on topic.)

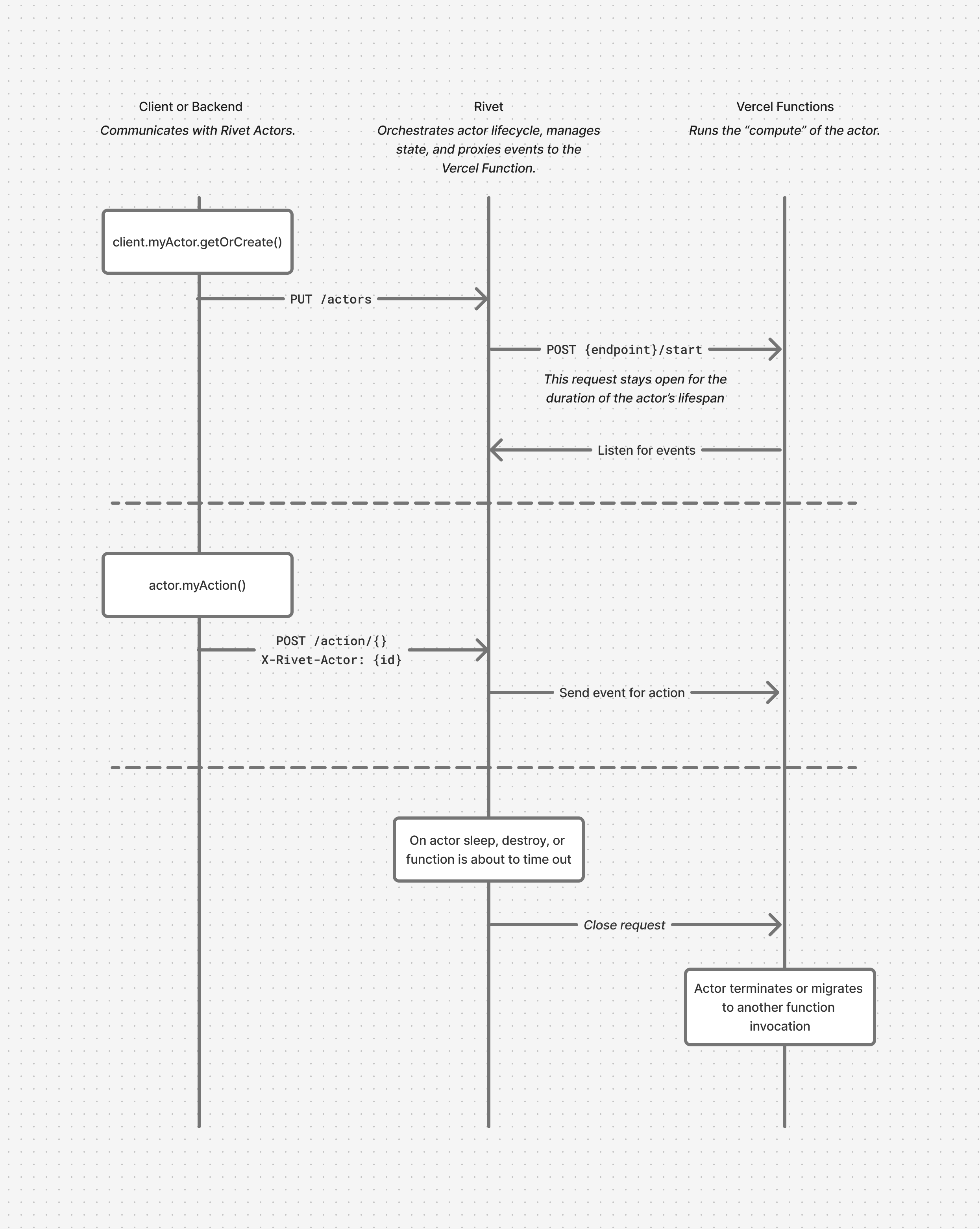

Let’s walk through how this works. At a high level, the architecture looks like this:

- Vercel Functions act as a server with a tunnel open to Rivet

- Rivet acts as the gateway that forwards requests to the tunnel

- The browser opens a WebSocket to Rivet like it’s a normal server

In this architecture, each Vercel Function invocation runs exactly one actor instance. This 1:1 mapping changes how we think about function calls: instead of handling a single HTTP request, each function becomes a “WebSocket lobby” that can handle multiple WebSockets. Our implementation allows multiple WebSockets to connect to the same Vercel Function, all interacting with the same actor.

Here’s how it works step-by-step:

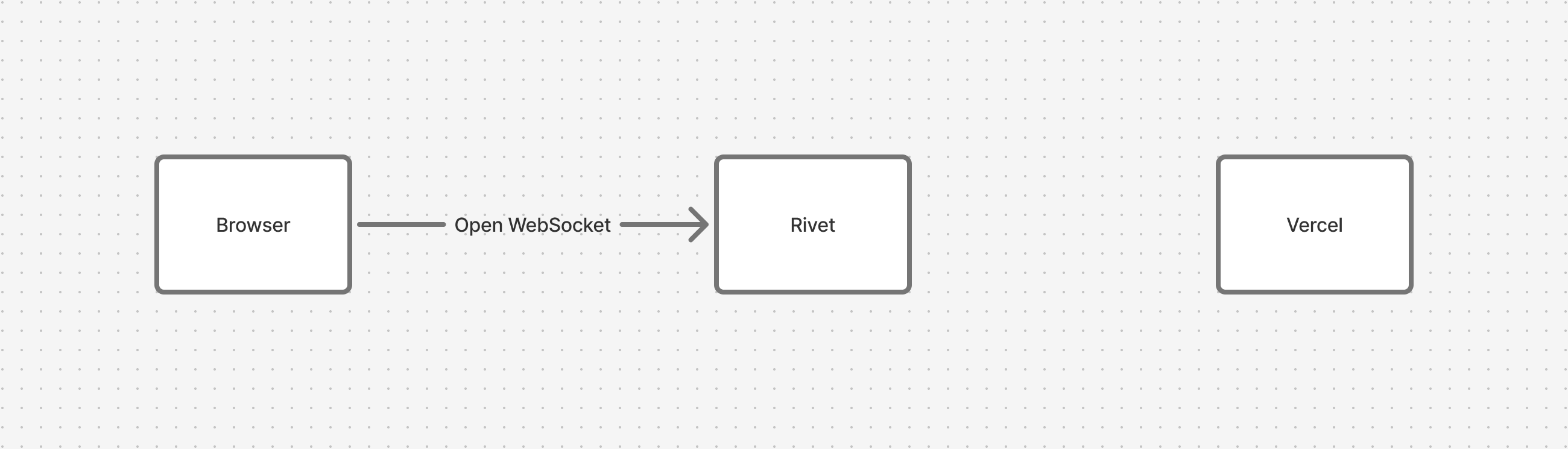

Step 1: Browser opens a WebSocket

The user’s browser initiates a WebSocket connection to Rivet (acting as the gateway). At this point, no Vercel Function is running yet.

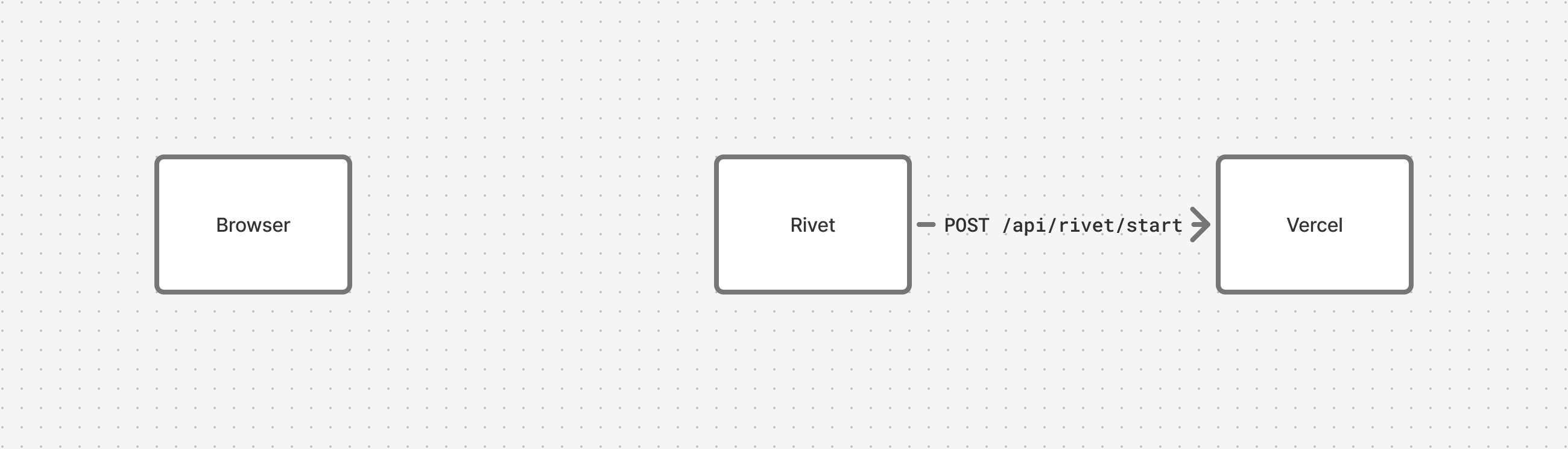

Step 2: Open runner request

To start the server on Vercel, Rivet sends a long-lived request to POST /api/rivet/start that remains open until the function timeout (300s on the Hobby plan at the time of writing; see the Vercel documentation). This creates the “server” that will handle the tunnel and serve multiple WebSockets.

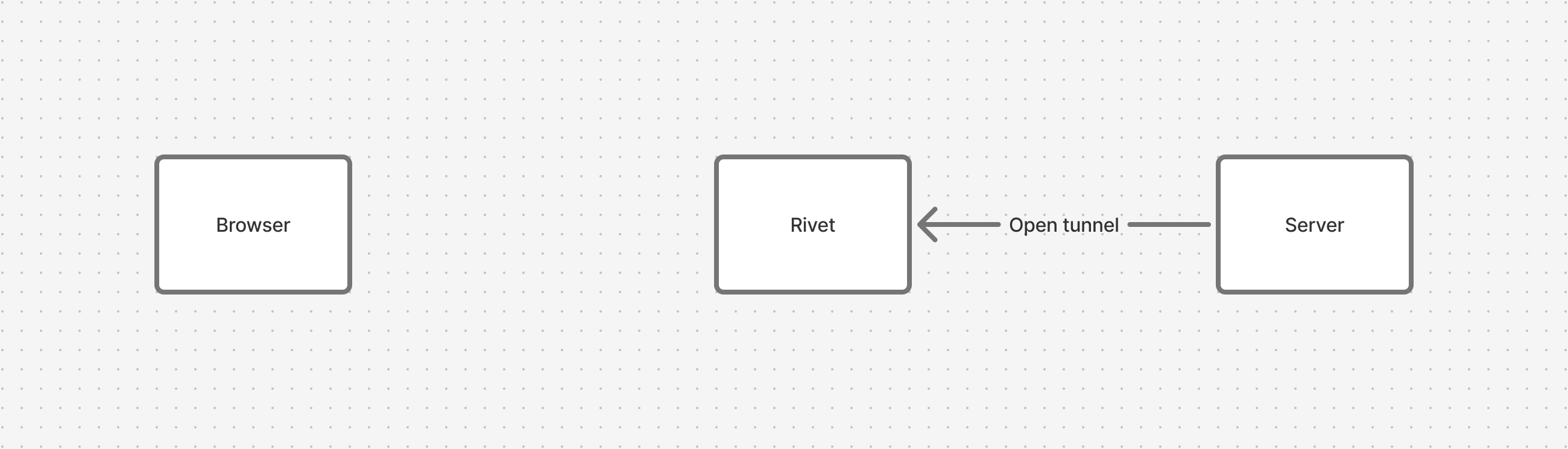

Step 3: Open tunnel from Vercel

Once the runner request starts, it will open a tunnel to Rivet. This tunnel will be used to receive all WebSocket events and future WebSocket connections.

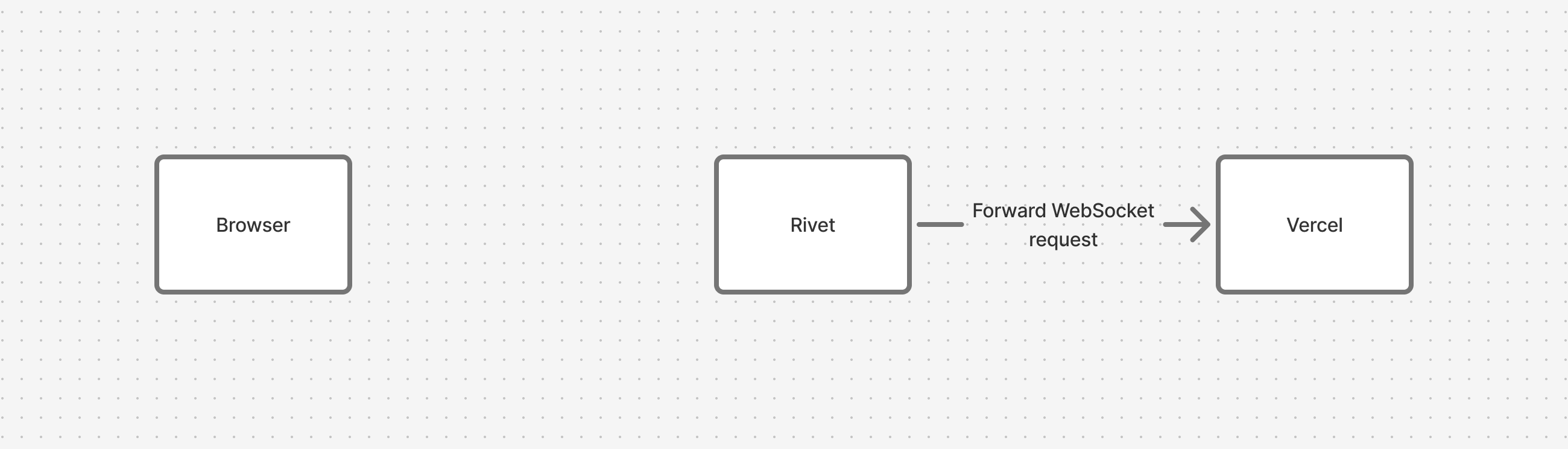

Step 4: Forward WebSocket request

Now that the tunnel is open, we can forward the WebSocket request to Vercel via the tunnel.

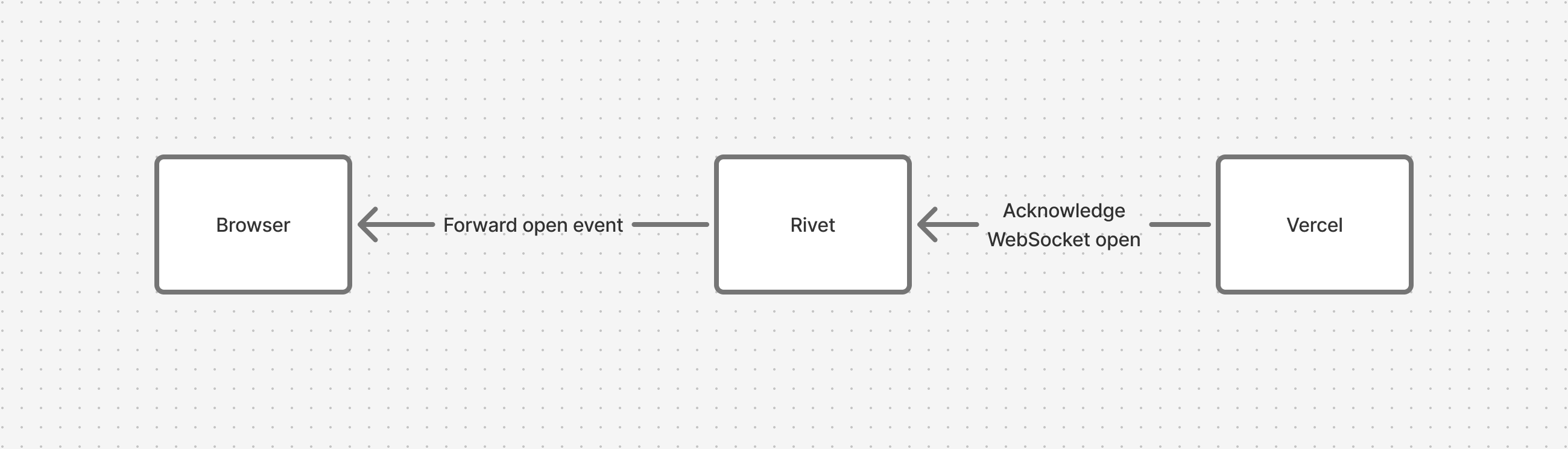

Step 5: Send request response

The Vercel Function acknowledges that the WebSocket is open.

From this point on, both the browser and the Vercel Function can send events over the WebSocket.
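The five steps can be traced with a small simulation. This is a hypothetical model, not RivetKit code: RivetGateway, VercelFunction, and their methods are illustrative names, and direct method calls stand in for the runner request, the tunnel, and the forwarded WebSocket open. Only the POST /api/rivet/start path comes from the description above.

```typescript
// Hypothetical trace of the five connection steps; names are illustrative.
type Log = string[];

class VercelFunction {
  private log: Log;
  constructor(log: Log) {
    this.log = log;
  }

  // Step 2 lands here; Step 3 is the outbound tunnel it opens in response.
  handleStart(gateway: RivetGateway): void {
    this.log.push("function: received POST /api/rivet/start");
    gateway.registerTunnel(this);
    this.log.push("function: opened tunnel to gateway");
  }

  // Step 4 arrives here; the return value is the Step 5 acknowledgement.
  onWebSocketOpen(connId: string): boolean {
    this.log.push(`function: accepted ${connId}`);
    return true;
  }
}

class RivetGateway {
  private log: Log;
  private spawn: () => VercelFunction;
  private tunnel: VercelFunction | null = null;

  constructor(log: Log, spawn: () => VercelFunction) {
    this.log = log;
    this.spawn = spawn;
  }

  registerTunnel(fn: VercelFunction): void {
    this.tunnel = fn;
  }

  // Step 1: the browser connects to the gateway; no function is running yet.
  openWebSocket(connId: string): boolean {
    this.log.push(`gateway: browser opened ${connId}`);
    if (this.tunnel === null) {
      this.log.push("gateway: no runner, sending POST /api/rivet/start");
      this.spawn().handleStart(this);
    }
    if (this.tunnel === null) throw new Error("runner never opened a tunnel");
    // Step 4: forward the open through the tunnel and wait for the ack.
    const acked = this.tunnel.onWebSocketOpen(connId);
    this.log.push(`gateway: ${connId} ${acked ? "open" : "rejected"}`);
    return acked;
  }
}

const log: Log = [];
const gateway = new RivetGateway(log, () => new VercelFunction(log));
gateway.openWebSocket("ws-1");
console.log(log.join("\n"));
```

Running it prints six lines in step order: the browser’s open, the runner request, the tunnel, the forwarded open, and the acknowledgement on both sides.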

Multiplayer and multiple WebSockets per server

So far we’ve shown how a single WebSocket connects to a Vercel Function. But the real power comes from having multiple WebSockets connect to the same actor, enabling multiplayer logic for collaborative documents, multiplayer games, local-first sync (multiple devices), pub/sub servers, and more.

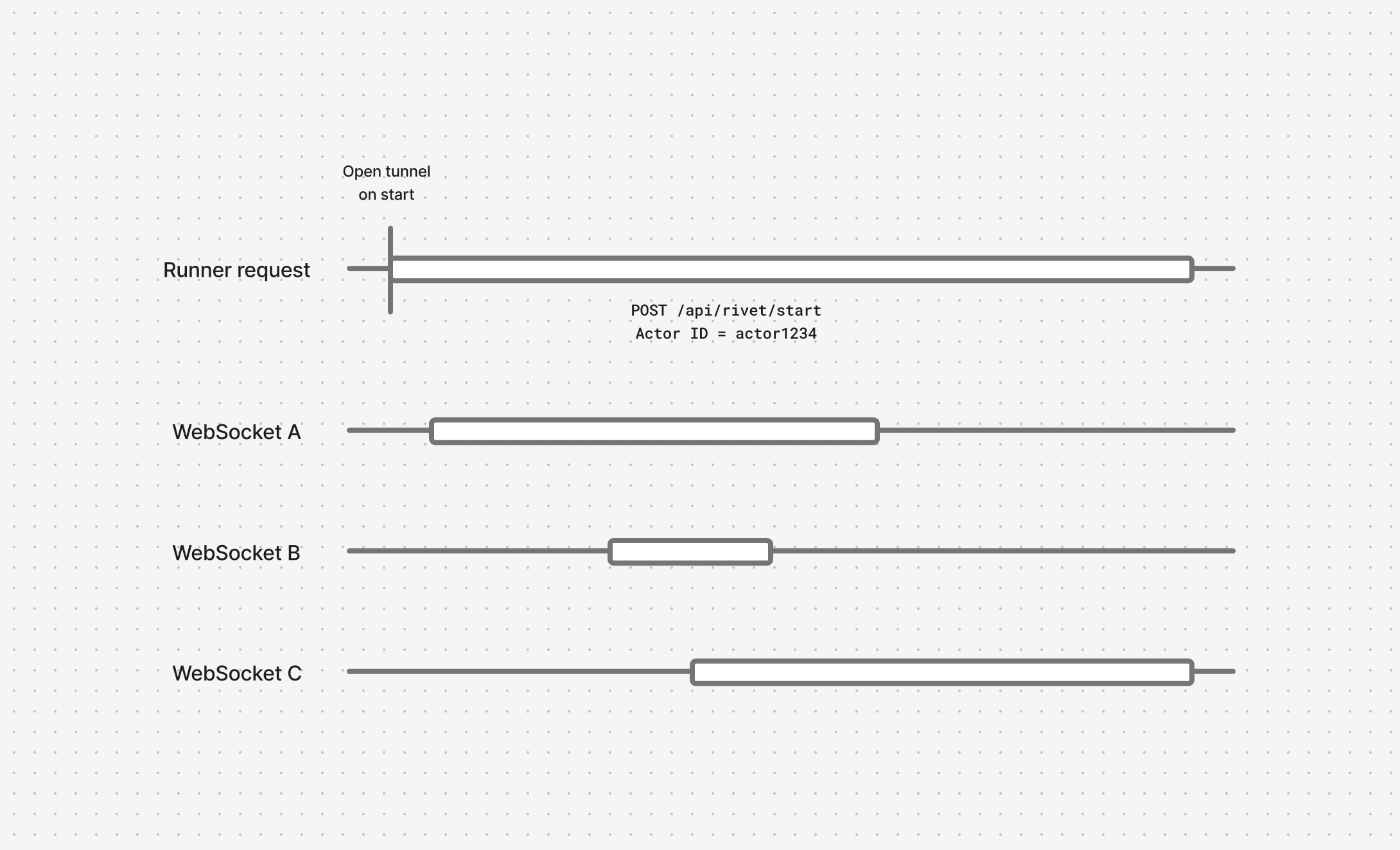

This is where actor keys come in. Each actor has a unique string identifier that’s globally unique across all edge regions. When multiple clients connect with the same actor key, Rivet routes all their WebSocket connections to the same Vercel Function instance. This means all those clients share the same running code and state, i.e. they’re all in the same “lobby.”

Here’s how it works: when a new WebSocket wants to connect to an existing actor, Rivet repeats steps 4 & 5 (forwarding the WebSocket request through the tunnel and getting acknowledgment from the Vercel Function). Each new connection gets routed through the same tunnel to the same function instance.

This way, a single runner request (POST /api/rivet/start) can serve multiple WebSockets.
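Here is a hypothetical sketch of key-based routing in the same in-memory style: the first connection for a key pays for a runner request, and every later connection with that key lands on the same actor and shares its state. Actor, KeyRouter, and startRequests are illustrative names, not RivetKit’s API.

```typescript
// Hypothetical model of actor-key routing; names are illustrative only.
class Actor {
  readonly key: string;
  readonly connections = new Set<string>();
  readonly messages: string[] = [];

  constructor(key: string) {
    this.key = key;
  }

  // Every connected client reads and writes this same state.
  post(connId: string, text: string): number {
    this.messages.push(`${connId}: ${text}`);
    return this.messages.length;
  }
}

class KeyRouter {
  private actors = new Map<string, Actor>();
  startRequests = 0; // POST /api/rivet/start calls made so far

  connect(key: string, connId: string): Actor {
    let actor = this.actors.get(key);
    if (actor === undefined) {
      // Steps 2-3 happen only for the first connection to a key.
      this.startRequests++;
      actor = new Actor(key);
      this.actors.set(key, actor);
    }
    // Steps 4-5 repeat for each new WebSocket, over the same tunnel.
    actor.connections.add(connId);
    return actor;
  }
}

const router = new KeyRouter();
const a = router.connect("room-42", "alice");
const b = router.connect("room-42", "bob");
router.connect("room-7", "carol");
a.post("alice", "hi");
b.post("bob", "hey");
console.log(a === b, router.startRequests); // true 2
```

Here a and b are the same instance, both messages land in one shared list, and three connections needed only two start requests (one per key).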

Timeouts and failover

There’s one huge issue we haven’t talked about yet: Vercel Functions have a timeout. On the Hobby plan, you cannot run functions for more than 300 seconds (as of writing).

That means that WebSockets would be terminated frequently, which defeats the whole point of WebSockets in the first place.

If you’re building on top of Rivet, all you need to know is that you don’t need to worry about it. Write your code as if it will run forever; we’ll handle the rest.

For the curious: Rivet’s fault-tolerant & durable state architecture meant timeout support worked seamlessly out of the box, with no special handling required.

Rivet Actors can survive any sort of interruption, including:

- Function timeouts

- Hard crashes

- Code upgrades

This is achieved by storing durable state in ctx.state (state for the whole actor). Modifications to the state are automatically replicated for failover. Ephemeral variables (such as database connections) can be stored in ctx.vars and will not be migrated to the new actor.
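The split between ctx.state and ctx.vars can be modeled as follows. This is a simplified sketch, not RivetKit’s implementation: startActor, snapshot, and ActorDefinition are hypothetical, and a JSON round trip stands in for Rivet’s automatic replication (which, per the paragraph above, happens as the state is modified rather than in one explicit snapshot call).

```typescript
// Hypothetical model of the ctx.state / ctx.vars contract; not RivetKit code.
interface ActorContext<S, V> {
  state: S; // durable: survives timeouts, crashes, upgrades
  vars: V; // ephemeral: rebuilt on every start, never migrated
}

interface ActorDefinition<S, V> {
  initialState: () => S;
  createVars: () => V;
}

// JSON round-tripping stands in for Rivet's automatic state replication.
function snapshot<S>(state: S): string {
  return JSON.stringify(state);
}

function startActor<S, V>(def: ActorDefinition<S, V>, saved?: string): ActorContext<S, V> {
  return {
    state: saved !== undefined ? (JSON.parse(saved) as S) : def.initialState(),
    vars: def.createVars(),
  };
}

let connectionsOpened = 0;
const counter: ActorDefinition<{ count: number }, { dbConnectionId: number }> = {
  initialState: () => ({ count: 0 }),
  createVars: () => ({ dbConnectionId: ++connectionsOpened }), // e.g. a new DB connection
};

// First function invocation does some work.
const before = startActor(counter);
before.state.count += 5;
const replicated = snapshot(before.state);

// The function times out and the actor restarts on a new invocation.
const after = startActor(counter, replicated);
console.log(after.state.count); // 5: durable state carried over
console.log(after.vars.dbConnectionId); // 2: a fresh ephemeral value
```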

When it’s time to migrate an actor to a new Vercel Function invocation because of a timeout, Rivet coordinates stopping the actor on the old function and starting it on the new one with the same state. The Rivet gateway then forwards all WebSocket traffic to the new function’s tunnel without terminating the browser’s WebSocket connection. Clients see no interruption in either their WebSocket connections or the application state.
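The handover works because the browser’s WebSocket terminates at the gateway, not at the function. In this hypothetical sketch, GatewayConnection keeps the client socket and simply repoints it at the next invocation. The post doesn’t say how Rivet treats messages that arrive mid-handover; queuing them, as done here, is an assumption.

```typescript
// Hypothetical sketch of a gateway-side handover; names are illustrative.
interface Backend {
  invocation: number;
  handle(msg: string): string;
}

function makeBackend(invocation: number): Backend {
  return { invocation, handle: (msg) => `#${invocation} got ${msg}` };
}

class ClientSocket {
  closed = false; // nothing in the migration path ever sets this
  received: string[] = [];
}

class GatewayConnection {
  readonly client: ClientSocket;
  private backend: Backend;
  private migrating = false;
  private pending: string[] = [];

  constructor(client: ClientSocket, backend: Backend) {
    this.client = client;
    this.backend = backend;
  }

  send(msg: string): void {
    if (this.migrating) {
      this.pending.push(msg); // assumption: hold traffic during the handover
      return;
    }
    this.client.received.push(this.backend.handle(msg));
  }

  beginMigration(): void {
    this.migrating = true;
  }

  // Point the same client socket at the new invocation, then flush the queue.
  completeMigration(next: Backend): void {
    this.backend = next;
    this.migrating = false;
    const queued = this.pending;
    this.pending = [];
    for (const msg of queued) this.send(msg);
  }
}

const client = new ClientSocket();
const conn = new GatewayConnection(client, makeBackend(1));
conn.send("a");
conn.beginMigration(); // old invocation nearing its timeout
conn.send("b"); // arrives mid-handover
conn.completeMigration(makeBackend(2));
conn.send("c");
console.log(client.closed, client.received.join(", ")); // false #1 got a, #2 got b, #2 got c
```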

Graceful WebSocket migration works seamlessly with the high-level actions + events API. For raw WebSocket handlers, full support will come with WebSocket hibernation (read more under Future Work below), due to complexities with managing open & close events during migrations.

Edge networking & multi-region routing

There’s one last critical piece to the puzzle: supporting multiple regions.

Vercel has an extensive edge network of 19 compute-capable regions (at the time of writing) for serving low-latency requests to all of your users. Not utilizing this network for low-latency realtime traffic would be a tragedy.

As discussed earlier, actor keys are globally unique across all regions. When you create an actor with key "foo", it’s automatically created in the nearest region to you (unless otherwise configured). All subsequent connections to "foo" are routed to that region through Rivet’s global edge network, which resolves the region that owns the actor and forwards traffic accordingly.
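Ownership resolution can be sketched in a few lines. This hypothetical GlobalKeyDirectory uses a single in-memory Map, which sidesteps the hard part (agreeing on one owner across regions). The region IDs are Vercel-style names and the latencies are made-up example values.

```typescript
// Hypothetical sketch of "create near the caller, then route to the owner".
type Region = string;

class GlobalKeyDirectory {
  private owners = new Map<string, Region>();

  // Return the owning region for `key`. A new key is created in the region
  // with the lowest latency to the caller (the default placement).
  resolve(key: string, latencyMs: Record<Region, number>): Region {
    const existing = this.owners.get(key);
    if (existing !== undefined) return existing; // route to the owner
    const entries = Object.entries(latencyMs);
    if (entries.length === 0) throw new Error("no regions available");
    const [nearest] = entries.reduce((best, cur) => (cur[1] < best[1] ? cur : best));
    this.owners.set(key, nearest);
    return nearest;
  }
}

const directory = new GlobalKeyDirectory();
// A user near Frankfurt creates "foo" (latencies are made-up example values)...
const owner = directory.resolve("foo", { fra1: 8, iad1: 90, sfo1: 150 });
// ...and a user near San Francisco is routed to fra1, not to their nearest region.
const routed = directory.resolve("foo", { fra1: 150, iad1: 70, sfo1: 5 });
console.log(owner, routed); // fra1 fra1
```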

You can nerd out about how we implemented globally unique keys with ~200 ms RTT by reading the slides from my conference talk. We’ll be doing a full writeup on this soon.

Actor sleeping & auto-waking

What happens when no more WebSockets are connected to an actor? Actors have a sleep timeout which configures how long they stay awake with no connections before terminating.

Just like with timeouts and crashes, sleeping actors rely on the same durable state mechanism (ctx.state) to preserve their state across sleep/wake cycles.

When a new WebSocket is opened to the actor, the state is automatically restored as if it never went to sleep.

This means that building stateful applications like AI agents, collaborative documents, or turn-based games is simple on Rivet: you can write your code as if your actor will run indefinitely, and Rivet will automatically sleep and wake your actor to save compute.
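The sleep/wake cycle can be simulated with a manual clock. ActorHost is a hypothetical stand-in, the 30 s sleep timeout is an arbitrary example value, and persisting state only at sleep time is a simplification (Rivet replicates ctx.state as it changes).

```typescript
// Hypothetical sleep/wake model driven by a simulated clock.
interface RoomState {
  messages: string[];
}

class ActorHost {
  private readonly sleepTimeoutMs: number;
  private persisted: string | null = null; // stand-in for durable storage
  private state: RoomState | null = null; // only non-null while awake
  private connections = 0;
  private idleSince = 0;
  wakeCount = 0;

  constructor(sleepTimeoutMs: number) {
    this.sleepTimeoutMs = sleepTimeoutMs;
  }

  get awake(): boolean {
    return this.state !== null;
  }

  connect(): RoomState {
    if (this.state === null) {
      // Auto-wake: restore what was persisted, or start fresh on first run.
      this.state = this.persisted !== null ? (JSON.parse(this.persisted) as RoomState) : { messages: [] };
      this.wakeCount++;
    }
    this.connections++;
    return this.state;
  }

  disconnect(now: number): void {
    this.connections--;
    if (this.connections === 0) this.idleSince = now;
  }

  // Called periodically: sleep once idle for at least sleepTimeoutMs.
  tick(now: number): void {
    if (this.state !== null && this.connections === 0 && now - this.idleSince >= this.sleepTimeoutMs) {
      this.persisted = JSON.stringify(this.state);
      this.state = null; // nothing running, no compute billed
    }
  }
}

const host = new ActorHost(30_000); // hypothetical 30 s sleep timeout
const room = host.connect();
room.messages.push("hello");
host.disconnect(0);
host.tick(10_000);
console.log(host.awake); // true: idle for only 10 s
host.tick(30_000);
console.log(host.awake); // false: asleep, state persisted
const restored = host.connect();
console.log(restored.messages.length, restored.messages[0], host.wakeCount); // 1 hello 2
```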

Local development with Next.js

Getting started with Rivet on Next.js is simple: just npm install @rivetkit/next-js and run next dev. Everything works out of the box as a vanilla NPM library: no separate CLI to install, no cloud signup required, and no extra dev commands.

Making this work specifically for Next.js was tricky: in standalone Node.js applications, RivetKit runs as a pure JavaScript library with a single persistent object managing development server state. But Next.js dev mode is completely stateless — there’s no way to maintain global state for our dev server between function calls.

To solve this, we transparently spawn Rivet Engine as a child process from the NPM package. We put a lot of work into making the Rivet Engine a lightweight, self-contained Rust binary designed with no external service requirements. This made it easy to transparently run the Engine for Next.js development.

In production, Rivet Cloud (or your self-hosted Rivet Engine) handles calling Vercel Functions and managing state. In development, the child process fills the same role.

When running next dev, visit http://127.0.0.1:6420 to access the full Rivet Engine dashboard — the same powerful observability tools you get on Rivet Cloud.

Fluid Compute’s unique advantages for actors

When we started building Rivet Actors, Vercel was not even a consideration. It seemed like the actor model wouldn’t fit the stateless architecture, and I was worried that stateless functions would be too limited: timeouts, no WebSocket support, and doubts about whether HTTP/2 multiplexing could efficiently handle the high volume of requests needed to spawn many actors (since each actor requires its own function invocation).

At some point while planning our v2.0, I sketched out an idea for how we could support Vercel, but it seemed like a crazy pipe dream not worth pursuing. The idea kept gnawing at us, though, so we time-blocked a couple of days to implement an MVP and see how it worked. It worked phenomenally: Rivet’s support for tunneling and fault tolerance made all of our original concerns about stateless functions a complete non-issue.

Beyond just solving those initial concerns, running actors on Fluid Compute revealed unexpected advantages that traditional long-running server architectures cannot replicate:

- Cost-efficient scaling: Only pay for active CPU time, not idle compute. This makes both idle actors (background notifications, turn-based games) and burst workloads cost-efficient — you can scale to thousands of actors during peak traffic without paying for capacity during off-peak times.

- 19 edge regions: Realtime apps are highly latency sensitive, so having an extensive edge network lets us bring latency down for your users.

- Observability: Because 1 Function = 1 Actor, it’s really easy to debug actors. On other platforms, you’ll have to filter logs by an actor ID and make sure all logs correctly include the actor ID. On Vercel, logs are aggregated by function call/actor automatically.

- Preview deployments: Expect to see a deeper preview deployment integration with Rivet. Each preview branch costs $0 when idle because of Fluid Compute.

- 1-click templates: Deploying a Rivet app to Vercel from our dashboard is incredibly fast. Vercel templates handle all the fussing around with Git and deployments for you that we’d normally have to write extensive docs for.

- Bun runtime (coming soon to Fluid Compute): Every rudimentary benchmark we’ve run comparing Node.js and Bun for Rivet shows Bun crushing Node.js. WebSockets are performance sensitive, and Bun support on Fluid Compute is going to give a massive performance boost for free.

Future work: WebSocket hibernation

We have a big improvement for WebSockets in the pipeline: WebSocket hibernation. WebSocket hibernation lets long-lived WebSockets stay open while the actor goes to sleep. Currently, an actor must keep running as long as any WebSocket is connected to it.

This works by holding the WebSocket open at the Rivet gateway while the Vercel Function terminates. When a message arrives on a hibernated WebSocket, Rivet wakes the actor in a new function invocation and forwards the message through.

This is useful for use cases like notifications, idle chats, and turn-based games where bi-directional realtime is important but you don’t need to have actors running when the WebSocket is doing nothing.
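Here is a hypothetical sketch of the bookkeeping hibernation implies: the socket is tracked at the gateway, the actor can stop, and a message on a still-open socket starts a new invocation. HibernatingGateway and its methods are illustrative; since the feature is still future work, this reflects only the behavior described above, not its implementation.

```typescript
// Hypothetical hibernation model: sockets live at the gateway, not the function.
class HibernatingGateway {
  private openSockets = new Set<string>();
  private actorRunning = false;
  invocations = 0;
  delivered: string[] = [];

  open(socketId: string): void {
    this.openSockets.add(socketId);
  }

  // The function exits while idle; sockets in openSockets are left untouched.
  hibernate(): void {
    this.actorRunning = false;
  }

  onMessage(socketId: string, msg: string): void {
    if (!this.openSockets.has(socketId)) throw new Error(`socket ${socketId} is not open`);
    if (!this.actorRunning) {
      // Wake the actor in a new function invocation before forwarding.
      this.invocations++;
      this.actorRunning = true;
    }
    this.delivered.push(`${socketId}: ${msg}`);
  }

  get openCount(): number {
    return this.openSockets.size;
  }
}

const gw = new HibernatingGateway();
gw.open("player-1");
gw.onMessage("player-1", "move e4"); // starts invocation 1
gw.hibernate(); // idle: the function exits, the socket stays open
gw.onMessage("player-1", "move e5"); // wakes invocation 2
console.log(gw.invocations, gw.openCount); // 2 1
```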

Bonus: Actors can do a lot more than just WebSockets

This blog post is hyper-focused on WebSockets. But actors can do a lot more:

- Scheduling: Think setTimeout, but with a timeout that can be arbitrarily large. Your actor will go to sleep until the timeout triggers.

- State: Durable storage for your JavaScript object that automatically persists across migrations.

- Long-running jobs: Actors can run jobs longer than the Vercel function timeout — including long-running AI agents — by transparently migrating between function invocations.

- Background jobs: Rivet Actors are great for firing off requests in the background, with no connected client required to handle them

- Bun support: Bun support is coming to Vercel Fluid Compute. Rivet is ready, and it’s going to make a huge impact on performance.
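The Scheduling item works because a timer can be stored as data. In this hypothetical sketch, alarms are kept in persisted state as absolute timestamps, the platform wakes the actor at the earliest one, and due alarms fire on wake. schedule, nextWakeAt, and runDue are illustrative names, not RivetKit’s scheduling API.

```typescript
// Hypothetical durable scheduling: alarms are plain data in persisted state.
interface Alarm {
  at: number; // absolute time in ms, so it survives sleep and migration
  action: string;
}

interface SchedulerState {
  alarms: Alarm[];
}

function schedule(state: SchedulerState, now: number, delayMs: number, action: string): void {
  state.alarms.push({ at: now + delayMs, action });
  state.alarms.sort((a, b) => a.at - b.at);
}

// When should the platform wake the sleeping actor? null if nothing is scheduled.
function nextWakeAt(state: SchedulerState): number | null {
  return state.alarms.length > 0 ? state.alarms[0].at : null;
}

// On wake, fire every alarm whose time has passed and keep the rest.
function runDue(state: SchedulerState, now: number): string[] {
  const due = state.alarms.filter((a) => a.at <= now).map((a) => a.action);
  state.alarms = state.alarms.filter((a) => a.at > now);
  return due;
}

const DAY = 24 * 60 * 60 * 1000;
const state: SchedulerState = { alarms: [] };
schedule(state, 0, 30 * DAY, "send-reminder");
schedule(state, 0, DAY, "end-turn");

// Far longer than any function timeout; it survives because it is just data.
const restored: SchedulerState = JSON.parse(JSON.stringify(state));
console.log(nextWakeAt(restored) === DAY); // true
console.log(runDue(restored, 2 * DAY).join(", ")); // end-turn
console.log(nextWakeAt(restored) === 30 * DAY); // true
```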

FAQ

Why not SSE + pub/sub on Vercel Functions?

SSE is commonly used for streaming data from the server to the client. On serverless platforms like Vercel, SSE is typically implemented with pub/sub + stateless functions.

This approach has a few issues that Rivet’s actor model solves:

- No state coordination: SSE on serverless platforms typically relies on pub/sub + stateless functions, which means no single instance “owns” the state for a room or session. Most realtime use cases (collaborative docs, local-first sync, multiplayer games) require an authoritative state that coordinates all clients. With SSE, managing state requires a database/cache, which introduces complex race conditions on concurrent reads/writes. Rivet actors solve this by giving each room a single stateful instance that all clients connect to.

- Timeouts: SSE streams still hit the 300s function timeout and must reconnect. Rivet gracefully migrates WebSocket connections to survive indefinitely.

- Bi-directional communication: SSE is only for streaming events from the server, which limits use cases significantly. WebSockets can send events from both the server and the client.

- Proxy issues: Many proxies don’t handle the text/event-stream MIME type correctly and buffer responses, which delays realtime events or breaks them entirely. WebSockets are usually handled correctly by proxies.

- Requires pub/sub server: SSE implementations usually rely on a pub/sub server for broadcasting events. This is suitable for simple use cases like notifications.

- Binary encoding: SSE cannot natively encode binary data. Binary data must be base64 encoded, which increases payload size by ~33%, making SSE a poor fit for realtime applications with binary payloads.
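The ~33% figure follows from how base64 works: every 3 input bytes become 4 output characters, so an n-byte payload encodes to 4 * ceil(n / 3) characters. A quick TypeScript check using the standard btoa function (available in browsers and in Node.js 16+):

```typescript
// Base64 turns every 3 bytes into 4 ASCII characters.
function base64Length(bytes: Uint8Array): number {
  // btoa takes a "binary string" with one character per byte.
  const binary = Array.from(bytes, (b) => String.fromCharCode(b)).join("");
  return btoa(binary).length;
}

const payload = new Uint8Array(3000); // e.g. a chunk of binary game state
for (let i = 0; i < payload.length; i++) payload[i] = i % 256;

const encoded = base64Length(payload);
const overhead = (encoded - payload.length) / payload.length;
console.log(encoded, `${(overhead * 100).toFixed(1)}%`); // 4000 33.3%
```

A WebSocket binary frame carries the same 3,000 bytes as-is, plus a frame header of at most 14 bytes.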

Rivet also supports SSE out of the box by setting transport to "sse" in createClient/createRivetKit.

Why are there no native WebSockets on Vercel?

I’ve worked on platforms that allow developers to run game servers using WebSockets, TCP, and UDP on our infrastructure. I don’t work at Vercel, but I can take a guess from past experience:

As an infrastructure provider, we need to update our servers frequently, which usually means terminating all active connections (including WebSockets).

At Rivet, we’re able to help provide WebSockets on Vercel because of two core components:

- We are careful to gracefully migrate the load balancers that manage active WebSocket connections so that WebSockets are not terminated abruptly

- Actors’ durable state & fault tolerance let actors transparently outlive Vercel’s function timeouts